The Good of Event Sourcing - Conflict Handling, Replication and Domain Evolution

Event Sourcing is a brilliant solution for high-performance or complex business systems, but you need to be aware that it also introduces challenges most people don't tell you about. In June, I already blogged about the things I would do differently next time. But after attending another introductory presentation about Event Sourcing recently, I realized it is time to talk about some real experiences. So in this multi-part post, I will share the good, the bad and the ugliness to prepare you for the road ahead. After talking about the awesomeness of projections last time, let’s talk about some more goodness.

Conflict handling becomes a business concern

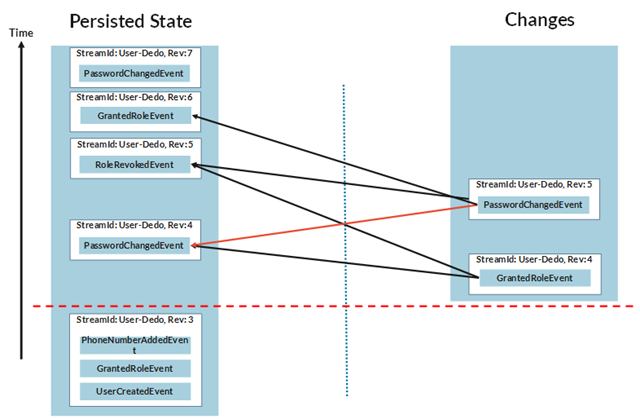

Many modern systems are built with optimistic concurrency as the underlying concurrency principle. So as long as two or more people are not doing things on the same domain object, everything is fine. But if they do, then the first one that manages to get its changes handled by the domain wins. When the number of concurrent users is low this is usually fine, but in a highly concurrent website such as an online bookstore like Amazon, this is going to fall apart very quickly. At Domain-Driven Design Europe, Udi Dahan mentioned a strategy in which completing an order for a book would never fail, even if you're out of books. Instead, he would hold the order for a certain period of time to see if new stock could be used to fulfil the order after all. Only after this time expires would he send an email to the customer and reimburse the money. Most systems don't need this kind of sophistication, but event sourcing does offer a strategy that gives you more control over how conflicts should be handled: event merging. Consider the diagram below.

The red line denotes the state of the domain entity user with ID User-Dedo as seen by two concurrent people working on this system. First, the user was created, then a role was granted and finally his or her phone number was added. Considering there were three events at the time this all started, the revision was 3. Now consider those two people doing administrative work and thereby causing changes without knowing about each other. The left side of the diagram depicts one of them changing the password and revoking a role, whereas the right side shows another person granting a role and changing the password as well.

When the time comes to commit the changes of the right side into the left side, the system should be able to detect that the left side already has two events since revision 3 and is now at revision 5. It then needs to cross-reference each of these with the two events from the right side to see if there are conflicts. Is the GrantedRoleEvent from the right side in conflict with the PasswordChangedEvent on the left? Probably not, so we append that event to the left side. But who are we to decide? Can we take decisions like these? No, and that's a good thing. It’s the job of the business to decide that. Who else is better at understanding the domain?

Continuing with our event merging process, let's compare that GrantedRoleEvent with the RoleRevokedEvent on the left. If these events were acting on the same role, we would have to consult the business again. But since we know that in this case they dealt with different roles, we can safely merge the event into the left side and give it revision 6. Now what about those attempts to change the passwords at almost the same time? Well, after talking to our product owner, we learned that taking the last password was fine. The only caveat is that the clocks of different machines can vary by up to five minutes, but the business decided to ignore that for now. Just imagine if you would let the developers make that decision. They would probably introduce a very complicated protocol to ensure consistency whatever the cost…
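To make the merging walk-through a bit more tangible, here is a minimal Python sketch of it. It is an illustration under assumptions, not how any particular event store does it: the `Event` class, the `RULES` table, the `merge` function, the verdict strings and the event names `UserCreatedEvent` and `PhoneNumberAddedEvent` are all hypothetical. It also assumes that event pairs without an explicit rule can simply be appended and that a grant and a revoke of the same role get rejected, which are exactly the kind of decisions the business would have to confirm.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Event:
    kind: str
    data: dict = field(default_factory=dict)
    timestamp: float = 0.0  # wall-clock time of the originating machine


class MergeConflict(Exception):
    """Raised when a business rule says two events cannot be reconciled."""


# A rule compares an already committed event with an incoming one and returns
# "append" (no conflict), "discard" (committed event wins) or "reject".
Rule = Callable[[Event, Event], str]


def roles_must_differ(committed: Event, incoming: Event) -> str:
    # Assumption: touching the same role concurrently is a real conflict.
    if committed.data["role"] == incoming.data["role"]:
        return "reject"
    return "append"


def last_password_wins(committed: Event, incoming: Event) -> str:
    # The rule the product owner chose; clock skew is knowingly ignored.
    return "append" if incoming.timestamp >= committed.timestamp else "discard"


RULES: Dict[Tuple[str, str], Rule] = {
    ("RoleRevokedEvent", "GrantedRoleEvent"): roles_must_differ,
    ("PasswordChangedEvent", "PasswordChangedEvent"): last_password_wins,
}


def merge(stream: List[Event], expected_revision: int,
          incoming: List[Event]) -> List[Event]:
    """Merge events that were based on expected_revision into the stream."""
    concurrent = stream[expected_revision:]  # committed since that revision
    merged = list(stream)
    for new in incoming:
        verdicts = []
        for old in concurrent:
            rule = RULES.get((old.kind, new.kind))
            # Assumption: pairs without a rule do not conflict.
            verdicts.append(rule(old, new) if rule else "append")
        if "reject" in verdicts:
            raise MergeConflict(f"{new.kind} conflicts with a concurrent change")
        if "discard" not in verdicts:
            merged.append(new)
    return merged


# The left side is at revision 5; the right side started from revision 3.
left = [
    Event("UserCreatedEvent"),
    Event("GrantedRoleEvent", {"role": "reader"}),
    Event("PhoneNumberAddedEvent"),
    Event("PasswordChangedEvent", timestamp=100.0),
    Event("RoleRevokedEvent", {"role": "reader"}),
]
right = [
    Event("GrantedRoleEvent", {"role": "editor"}),
    Event("PasswordChangedEvent", timestamp=105.0),
]
merged = merge(left, 3, right)
```

The point of keeping the rules in a table is that each entry corresponds to one answer from the business, so adding or changing a decision does not touch the merging loop itself.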

Distributed Event Sourcing

As you know, an event store contains an immutable history of everything that ever happened in the domain. Also, most (if not all) event store implementations allow you to enumerate the store in order of occurrence starting at a specific point in time. Usually, those events are grouped in functional transactions that represent the events that were applied on the same entity at the same point in time. NEventStore, for example, calls these commits and uses a checkpoint number to identify such a transaction. With that knowledge, you could imagine that an event store is a great vehicle for application-level replication. You replicate one transaction at a time and use the checkpoint of that transaction as a way to continue in case the replication process gets interrupted somehow. The diagram below illustrates this process.

As you can see, the receiving side only has to append the incoming transactions to its local event store and ask the projection code to update its projections. Pretty neat, isn't it? If you want, you can even optimize the replication process by amending the transactions in the event store with metadata such as a partitioning key. The replication code can use that information to limit the amount of data that needs to be replicated. And what if your event store has the notion of archivability? In that case, you can even exclude certain transactions entirely from the replication process. The options you have are countless.
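The replication process described above could be sketched roughly like this in Python. Everything here is a simplified, in-memory assumption for illustration: `Commit`, `EventStore`, `Replica`, `read_after` and `pull` are hypothetical names and not the NEventStore API, and the "projection" is just a list of event names. A real implementation would also have to store the commit and the checkpoint atomically, or make appending idempotent, so that an interruption never causes duplicates.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class Commit:
    checkpoint: int                      # position in the source store
    stream_id: str
    events: Tuple[str, ...]
    partition_key: Optional[str] = None  # hypothetical replication metadata


@dataclass
class EventStore:
    commits: List[Commit] = field(default_factory=list)

    def read_after(self, checkpoint: int) -> List[Commit]:
        """Enumerate commits in order, starting after the given checkpoint."""
        return [c for c in self.commits if c.checkpoint > checkpoint]


@dataclass
class Replica:
    store: EventStore = field(default_factory=EventStore)
    last_checkpoint: int = 0             # where to resume after an interruption
    projected: List[str] = field(default_factory=list)

    def pull(self, source: EventStore,
             wanted: Callable[[Commit], bool] = lambda c: True) -> int:
        """Copy new commits from the source; returns how many were copied."""
        copied = 0
        for commit in source.read_after(self.last_checkpoint):
            if wanted(commit):
                self.store.commits.append(commit)     # append locally
                self.projected.extend(commit.events)  # update projections
                copied += 1
            # Skipped commits still advance the checkpoint, so they are
            # not inspected again on the next pull.
            self.last_checkpoint = commit.checkpoint
        return copied


source = EventStore([
    Commit(1, "User-Dedo", ("UserCreatedEvent",), partition_key="NL"),
    Commit(2, "User-Other", ("UserCreatedEvent",), partition_key="US"),
])
replica = Replica()


def only_nl(commit: Commit) -> bool:
    return commit.partition_key == "NL"


replica.pull(source, only_nl)  # copies commit 1 and skips commit 2
source.commits.append(
    Commit(3, "User-Dedo", ("PasswordChangedEvent",), partition_key="NL"))
replica.pull(source, only_nl)  # resumes after checkpoint 2
```

The `wanted` predicate is where a partitioning key or an archived flag would come into play: the replication code decides per transaction whether it needs to travel at all.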

But if the replication process involves many nodes, a common problem is that you need to make sure that the code used by all these nodes is in sync. However, using the event store as the vehicle for this enables another trick: staggered roll-outs. Since events are immutable, any change in the event schema requires the code to keep supporting every possible past version of a certain event. Most implementations use some kind of automatic event up-conversion mechanism so that the domain only needs to support the latest version. But because of that, it becomes completely feasible for a node that is running a newer version of the code to keep receiving events from an older node. It will just convert those older events into whatever it expects and continue the projection process. It will be a bit more challenging, but with some extra work you could even support the opposite. The older node would still store the newer events, but hold off the projection work until it has been upgraded to the correct version. Nonetheless, upgrading individual nodes probably already provides sufficient flexibility.
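As a rough illustration of such an up-conversion mechanism, consider the Python sketch below. The registry, the `upconverts` decorator, the versioned `PhoneNumberAddedEvent` payloads and the `can_project` check are all made up for this example; real frameworks each have their own way of registering converters.

```python
from typing import Callable, Dict, Tuple

Payload = Dict[str, object]

# (event name, version) -> function that produces the next version's payload
UPCONVERTERS: Dict[Tuple[str, int], Callable[[Payload], Payload]] = {}


def upconverts(name: str, from_version: int):
    """Register a converter from from_version to from_version + 1."""
    def register(fn: Callable[[Payload], Payload]):
        UPCONVERTERS[(name, from_version)] = fn
        return fn
    return register


@upconverts("PhoneNumberAddedEvent", 1)
def phone_v1_to_v2(payload: Payload) -> Payload:
    # Hypothetical schema change: v2 splits the number into two fields.
    country_code, number = str(payload["phone"]).split(" ", 1)
    return {"country_code": country_code, "number": number}


@upconverts("PhoneNumberAddedEvent", 2)
def phone_v2_to_v3(payload: Payload) -> Payload:
    # Hypothetical schema change: v3 adds a flag with a default value.
    return {**payload, "verified": False}


def latest_version(name: str) -> int:
    """The newest version this node's code knows about."""
    versions = [v for (n, v) in UPCONVERTERS if n == name]
    return max(versions) + 1 if versions else 1


def upconvert(event: dict) -> dict:
    """Apply converters one version at a time until none is left."""
    name, version, payload = event["name"], event["version"], event["payload"]
    while (name, version) in UPCONVERTERS:
        payload = UPCONVERTERS[(name, version)](payload)
        version += 1
    return {"name": name, "version": version, "payload": payload}


def can_project(event: dict) -> bool:
    """An older node stores newer events but postpones projecting them."""
    return event["version"] <= latest_version(event["name"])


old_event = {"name": "PhoneNumberAddedEvent", "version": 1,
             "payload": {"phone": "+31 612345678"}}
current = upconvert(old_event)
```

Because every converter only knows about the step to the next version, the domain and the projections only ever see the latest shape, regardless of which node originally wrote the event.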

Keeping your domain and the business aligned

So you've been doing Event Storming, domain modeling, brainstorming and all other techniques that promise you a chance to peek into the brains of those business people. Then you and your team go off to build an awesome domain model from one of the identified bounded contexts and everybody is happy. Then, after a certain period of production happiness, a new release is being built and you and the developers discover that you got the aggregate boundaries all wrong. You simply can't accommodate the new invariants in your existing aggregate roots. Now what? Build a new system? Make everything eventually consistent and introduce process managers and sagas to handle those edge cases? You could, but remember, your aggregates are rehydrated from the event store using the events. So why not just redesign or refactor your domain instead? Just split that big aggregate into two or more, convert that entity into a value object, or merge that weird value object into an existing entity. Sure, you would need more sophisticated event converters that know how to combine or split one or more event streams, but it is surely cheaper than completely rebuilding your system…
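To give an idea of what a converter that splits an event stream might look like, here is a small Python sketch. It assumes, purely for illustration, that the user aggregate from the earlier example is split into a profile and a credentials aggregate; `split_stream`, `route`, the stream naming scheme and the `UserCreatedEvent` and `PhoneNumberAddedEvent` names are hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

Event = Tuple[str, dict]  # (event name, payload)

# Assumption: these events belong to the new credentials aggregate.
CREDENTIAL_EVENTS = {"PasswordChangedEvent", "GrantedRoleEvent", "RoleRevokedEvent"}


def route(stream_id: str, event: Event) -> str:
    """Decide which of the new streams an existing event belongs to."""
    name, _ = event
    suffix = "Credentials" if name in CREDENTIAL_EVENTS else "Profile"
    return f"{stream_id}/{suffix}"


def split_stream(stream_id: str, events: List[Event],
                 route: Callable[[str, Event], str]
                 ) -> Dict[str, List[Tuple[int, Event]]]:
    """Distribute one stream over several, renumbering revisions per stream."""
    streams: Dict[str, List[Tuple[int, Event]]] = defaultdict(list)
    for event in events:  # the original order is preserved within each stream
        target = route(stream_id, event)
        revision = len(streams[target]) + 1
        streams[target].append((revision, event))
    return dict(streams)


history: List[Event] = [
    ("UserCreatedEvent", {}),
    ("GrantedRoleEvent", {"role": "reader"}),
    ("PhoneNumberAddedEvent", {}),
    ("PasswordChangedEvent", {}),
]
new_streams = split_stream("User-Dedo", history, route)
```

Since the original events are immutable, a converter like this would typically run on the way out of the store (or into a new store), leaving the original history untouched.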

Now if you were not convinced yet after reading my last post, are you now? Do you see other cool things you could do? Or do you have any concerns to share? I'd love to hear about them, so let me know by commenting below. Oh, and follow me at @ddoomen to get regular updates on my everlasting quest for a better architecture style.

Aviva Solutions

Fluent Assertions
